I Over-Engineered a Solution to My Steam Backlog. No Regrets.

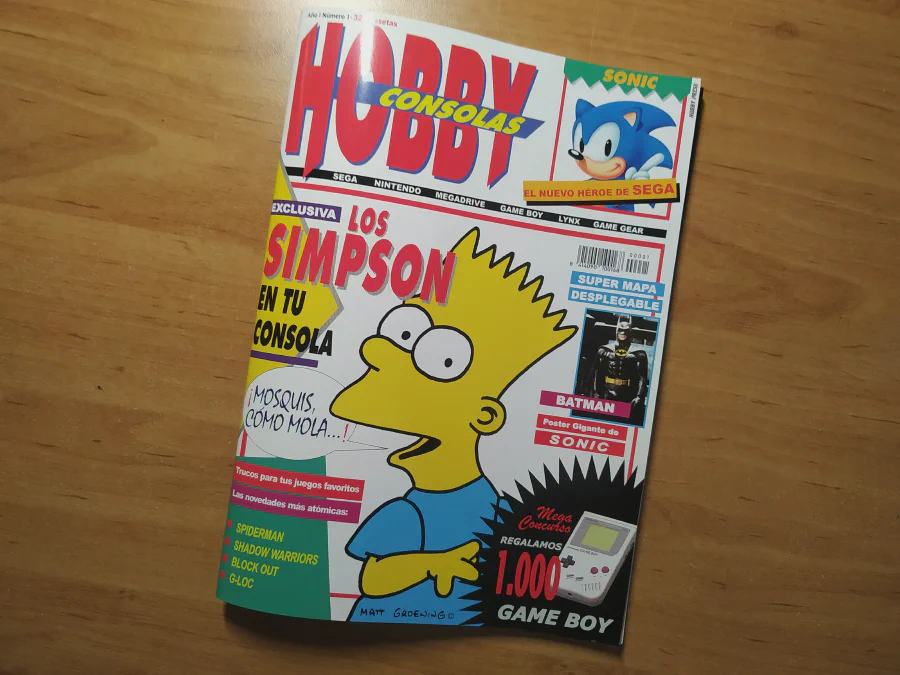

As a kid, I grew up reading video game magazines, primarily the legendary Spanish publications Hobby Consolas and Playmania. It was a tradition to stop by the newsstand on my way to school and ask the owner if they had already received the latest Hobby Consolas or Playmania, as well as the official NBA magazine. I spent hours reading the reports and the news sections. However, the section I enjoyed the most by far was the game analysis. An article explaining the gameplay and evaluating a title based on pre-established criteria, resulting in a final score, was enough for me to decide whether a game was worth playing. This was actually more important to me than playing the games themselves since new releases were largely inaccessible to me (except on specific dates like Christmas). So, reading the analysis was the closest thing to playing a game in the pre-YouTube era, before the days of walkthrough videos and gameplay streams.

Well, what I just described is perfectly exemplified by the post I wrote about MMORPGs for people over 30. In that post, I listed the games of the genre that I had played, established criteria and a scoring system, and presented the result of my evaluation as a tier list. Yes, I did exactly what magazine columnists used to do, allowing, of course, for the obvious differences in scale and quality.

Decades have passed since those days, and things have changed. Game scores now appear on websites and in YouTube video reviews, physical print media has become something of a cult interest, and my access to games has shifted drastically. Buying a game used to be a rare event that involved an exhaustive analysis of opportunity cost; today, thanks to stores like Steam, accumulating games is easy. Let’s just say I have probably gone a bit too far. As of this writing, my Steam library holds 1,047 games collected over the last 10 years. Yes, it is bizarre. And no, I haven’t played even half of them.

The Idea

From this desire to analyze games and to get the most out of my library, an idea emerged: what if I curated every game I own? Simple math says I am unlikely to ever play them all, and with work, courses, and a future heir on the way, “unlikely” is quickly turning into “impossible”.

With this in mind, I remembered what made the analysis in those magazines so unique: criteria. Defining criteria and sticking to them in an analysis turns your opinion from a vague feeling into something tangible. Yes, scores in the game industry can be a hollow and problematic way to evaluate games if taken as the sole mechanism. However, without a minimum standard, an opinion becomes mere guesswork.

This desire turned into a project: an AI-powered curator that evaluates my entire Steam library using hand-crafted criteria of my own and classifies the games into tier lists separated by subgenre. I named the project .2miu Curator.

In this post, I will explain how I did it, the struggles, and the numerous problems of a silly weekend project.

The Stack

Developer section alert!

Let’s address the fullstack elephant in the room and talk about the technology. For this project, I used Go as the main backend language and SolidJS for the frontend. Those who follow me know that this is my current standard stack. No, I will not evangelize about technology; that is for ignorant university students. What I can say about Go is that it is highly performant, in many benchmarks trailing only languages like Rust and C++, which is worth highlighting in itself. Beyond that, this project uses the feature that, for me, justifies the existence of Google’s language: goroutines. Yes, many languages work with threads, but Go took this to another level of efficiency. While handling concurrency in other languages often feels like a complex workaround or a heavy burden on memory, in Go goroutines are first-class citizens. Each one starts with a stack of only a few kilobytes, so you can run thousands of concurrent goroutines for the memory cost of a cup of coffee. Of course, this project won’t reach thousands of simultaneous requests, but as I said, Go is already my standard language and I needed concurrency, so it was a match!
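To illustrate the goroutine argument, here is a minimal sketch of how a batch of games could be analyzed with a bounded pool of goroutines. The Game and Result types, the analyzeAll function, and the worker count are hypothetical, not the project’s actual code, and the fake scorer stands in for the real DeepSeek call.

```go
package main

import (
	"fmt"
	"sync"
)

// Game is a hypothetical, trimmed-down record for an owned Steam title.
type Game struct {
	AppID int
	Name  string
}

// Result pairs a game with the outcome of its analysis.
type Result struct {
	AppID int
	Score float64
	Err   error
}

// analyzeAll runs analyze for every game using at most `workers` goroutines.
// Each slice index is written by exactly one goroutine, so no mutex is needed,
// and results come back in the same order as the input.
func analyzeAll(games []Game, workers int, analyze func(Game) (float64, error)) []Result {
	if workers < 1 {
		workers = 1
	}
	results := make([]Result, len(games))
	jobs := make(chan int) // indexes into games

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range jobs {
				score, err := analyze(games[i])
				results[i] = Result{AppID: games[i].AppID, Score: score, Err: err}
			}
		}()
	}

	for i := range games {
		jobs <- i
	}
	close(jobs)
	wg.Wait()
	return results
}

func main() {
	// Placeholder IDs and a fake scorer; a real version would call the LLM API here.
	games := []Game{{1, "Game A"}, {2, "Game B"}, {3, "Game C"}}
	fake := func(g Game) (float64, error) { return float64(g.AppID) * 2.5, nil }
	for _, r := range analyzeAll(games, 2, fake) {
		fmt.Println(r.AppID, r.Score, r.Err)
	}
}
```

A small fixed pool is mostly about respecting API rate limits; the goroutines themselves are cheap enough that memory is not the reason for the limit.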

Regarding the frontend, I opted for SolidJS, which is also part of my standard performance stack. I could argue that I did this to improve page loading by X seconds with mind-blowing metrics, but in reality, I chose it because it is what I use, or rather, what I chose to use.

For the database, I used MongoDB for the sake of simplicity. The architecture and objective of this project were screaming for SQLite to be used, but I ended up sacrificing reason for convenience. It happens, right?

Initially, this was going to be a desktop application using Wails as a layer between the backend (Go) and frontend (SolidJS). However, I am currently using Hyprland as my graphical environment on Arch Linux. Anticipating possible compatibility issues with the surrounding tooling, I decided to simplify and ship the application as a web app. Why didn’t I use Electron? I hate everything made with Electron. From my stack, you can tell how much I value performance, and bundling Chromium just to show a screen is not performant. There is also Tauri, which I have never touched, but it seems closer to Wails than to Electron, since it relies on the system’s webview instead of bundling a browser. Its core is written in Rust, which makes perfect sense for that approach.

The Project

Leaving the technological stack aside, the .2miu Curator project has a very simple flow:

1. The curator sync command calls the Steam Web API endpoint /IPlayerService/GetOwnedGames/v0001/, whose name is quite self-explanatory, and populates my MongoDB database with the games I own.

2. With the database populated, a second command, curator analyze, analyzes each game using the genre criteria I explain later and stores the results in a MongoDB collection called analyses.

3. The frontend displays the games and talks to a second backend that exposes the internal APIs (GetGames, Search, etc.).
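For the sync step, a minimal sketch of building the GetOwnedGames request URL and decoding its response could look like this. The query parameters (key, steamid, include_appinfo, include_played_free_games, format) are ones the Steam Web API accepts for this endpoint; the Go types, function names, and the hand-written sample payload are illustrative assumptions, and the HTTP call and the MongoDB write are left out.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/url"
)

// OwnedGame keeps a few fields from GetOwnedGames.
// "name" is only returned when include_appinfo is set.
type OwnedGame struct {
	AppID           int    `json:"appid"`
	Name            string `json:"name"`
	PlaytimeForever int    `json:"playtime_forever"` // minutes
}

// ownedGamesResponse mirrors the {"response": {...}} envelope.
type ownedGamesResponse struct {
	Response struct {
		GameCount int         `json:"game_count"`
		Games     []OwnedGame `json:"games"`
	} `json:"response"`
}

// ownedGamesURL builds the request URL for an API key and a 64-bit SteamID.
func ownedGamesURL(apiKey, steamID string) string {
	q := url.Values{}
	q.Set("key", apiKey)
	q.Set("steamid", steamID)
	q.Set("include_appinfo", "1")
	q.Set("include_played_free_games", "1")
	q.Set("format", "json")
	// Encode sorts the parameters by key.
	return "https://api.steampowered.com/IPlayerService/GetOwnedGames/v0001/?" + q.Encode()
}

// parseOwnedGames decodes the JSON body returned by the endpoint.
func parseOwnedGames(body []byte) ([]OwnedGame, error) {
	var r ownedGamesResponse
	if err := json.Unmarshal(body, &r); err != nil {
		return nil, err
	}
	return r.Response.Games, nil
}

func main() {
	fmt.Println(ownedGamesURL("YOUR_KEY", "YOUR_STEAMID64"))

	// Hand-written sample payload, not real account data.
	sample := []byte(`{"response":{"game_count":1,"games":[{"appid":220,"name":"Half-Life 2","playtime_forever":42}]}}`)
	games, err := parseOwnedGames(sample)
	fmt.Println(games, err)
}
```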

Technically, it is extremely simple, right? Any programmer looking at this flow could replicate it without a problem. So what makes this project unique? There are 3 points, and I want to explain them one by one.

Point 1: The Curation

As I said, the goal of the project is an AI game curator that analyzes games WITH MY CRITERIA. Without them, this would be a 5- or 10-minute project at most. It would also be fundamentally flawed, because the AI would have no parameters to evaluate the games against: it would receive the game information (name, Steam score, description, etc.) and judge each game in the most freestyle way possible.

One of the things I liked about my article on MMOs was the way I customized what I expect from an MMO. Not what I expect from a good game. What I expect from a good MMO. Unique things like “horizontal progression” or “leveling” are specific to that genre. If I evaluated an MMO with fixed categories like “Graphics”, “Sound”, “Fun”, etc., the result would be an absurd list. I am sure that in this scenario Black Desert Online would be TIER S, and reality is far from that.

I wanted something personalized by genres.

However, broader genres are hard to unify under a single set of criteria. For example, RPG is a genre that ranges from Soulslike to Looter RPG, passing through CRPG and Dungeon Crawler.

This is the point where the 10-minute project turned into an entire weekend.

I went genre by genre, reviewing subgenres and creating 5 analysis criteria that the AI should follow for each one. In the end, I covered 84 subgenres. With 5 criteria each, that adds up to 420 evaluation criteria, all written by hand. Yes, I could have asked an AI to do this, but then the project would have made no sense: I want DeepSeek to evaluate according to my criteria. Basically, I set the editorial line and hired the journalist (the AI) to write the reviews.

Yes, it was a titanic job, but fun. All these criteria were included in a file called subgenres.yml, as shown in the example below:

```yaml
"Metroidvania":
  - name: Map Design
    desc: Is the world interconnected elegantly? Are shortcuts satisfying?
  - name: Ability Impact
    desc: Do new powers meaningfully change movement and combat?
  - name: Combat Depth
    desc: Are boss fights challenging? Is there skill expression?
  - name: Exploration Reward
    desc: Is backtracking fun? Are secrets worth finding?
  - name: Guidance Balance
    desc: Is the "getting lost" factor balanced with clear objectives?
```

Do I care how a Metroidvania does on Graphics, Sound, and other technical aspects? No! I want to know whether the Map Design is good, whether new abilities change the way you move and fight, and whether the game guides you well or lets you get lost inside it.

When DeepSeek evaluates a Metroidvania, it has to answer the questions I wrote instead of drawing conclusions from its default randomness.
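Once subgenres.yml is loaded (for example with a YAML library such as gopkg.in/yaml.v3, not shown here), the criteria could be held in Go types like the ones below. This hypothetical sketch checks that every subgenre defines exactly five criteria and renders them as the numbered list format used in the analysis prompt.

```go
package main

import (
	"fmt"
	"strings"
)

// Criterion mirrors one name/desc entry in subgenres.yml.
type Criterion struct {
	Name string `yaml:"name"`
	Desc string `yaml:"desc"`
}

// Subgenres maps a subgenre name to its list of criteria.
type Subgenres map[string][]Criterion

// validate checks that every subgenre defines exactly `want` criteria.
func (s Subgenres) validate(want int) error {
	for genre, crit := range s {
		if len(crit) != want {
			return fmt.Errorf("subgenre %q has %d criteria, want %d", genre, len(crit), want)
		}
	}
	return nil
}

// renderCriteria formats criteria as a numbered list for the prompt.
func renderCriteria(crit []Criterion) string {
	var b strings.Builder
	for i, c := range crit {
		fmt.Fprintf(&b, "%d. **%s**: %s\n", i+1, c.Name, c.Desc)
	}
	return b.String()
}

func main() {
	s := Subgenres{
		"Metroidvania": {
			{"Map Design", "Is the world interconnected elegantly? Are shortcuts satisfying?"},
			{"Ability Impact", "Do new powers meaningfully change movement and combat?"},
			{"Combat Depth", "Are boss fights challenging? Is there skill expression?"},
			{"Exploration Reward", "Is backtracking fun? Are secrets worth finding?"},
			{"Guidance Balance", `Is the "getting lost" factor balanced with clear objectives?`},
		},
	}
	fmt.Println(s.validate(5))
	fmt.Print(renderCriteria(s["Metroidvania"]))
}
```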

Point 2: The Prompt

Since the analysis will be performed via AI, we need two important things: to know the game’s genre and to send my criteria for that genre.

Here comes the first challenge. Steam’s genres and tags come from the developers and the community, and each game has many of them. For example, Diablo IV is an Action RPG, specifically a Looter ARPG, whereas Elden Ring is also an Action RPG, but a Soulslike. On Steam, however, Diablo IV is listed as: Action RPG, Hack and Slash, Loot, Isometric, RPG, Online Co-Op, Dungeon Crawler, Single-player, Co-op. That is nine labels to define what Diablo IV is. I cannot manually categorize each game because, as I said, we are talking about more than 1,000 titles.

The approach here was to send the maximum amount of text information about the game to Deepseek, as well as the tags and genre listing, and let the AI evaluate what the best subgenre is for that game. Since it is a simple task, I used the deepseek-chat model, which is the most basic one. I set the temperature to 0 because I don’t want creativity, I want the judgment of a librarian.
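As a sketch, assuming the OpenAI-style chat completions payload that the examples in this post use, the classification request could be modeled in Go as below. The type and function names are hypothetical, and sending the request is omitted. One detail worth getting right: the temperature field has no omitempty tag, because with omitempty a value of 0 would be dropped from the JSON and the API would fall back to its default temperature.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Message is one chat turn.
type Message struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

// ChatRequest covers only the fields used in this post's examples.
// No omitempty on Temperature: 0 must be sent explicitly.
type ChatRequest struct {
	Model       string    `json:"model"`
	Temperature float64   `json:"temperature"`
	MaxTokens   int       `json:"max_tokens"`
	Messages    []Message `json:"messages"`
}

// newClassifierRequest builds the genre-classification call.
func newClassifierRequest(systemPrompt, userPrompt string) ChatRequest {
	return ChatRequest{
		Model:       "deepseek-chat",
		Temperature: 0,
		MaxTokens:   500,
		Messages: []Message{
			{Role: "system", Content: systemPrompt},
			{Role: "user", Content: userPrompt},
		},
	}
}

// encodeRequest marshals a request into the JSON body that would be POSTed.
func encodeRequest(r ChatRequest) (string, error) {
	b, err := json.Marshal(r)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	body, err := encodeRequest(newClassifierRequest("You are a strict librarian...", "## GAME DETAILS ..."))
	if err != nil {
		panic(err)
	}
	fmt.Println(body)
}
```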

Once the genre comes back, the actual game analysis runs, this time with deepseek-reasoner, the model that “thinks” before answering, as an extra layer of reliability. DeepSeek then analyzes the game using the criteria of that specific genre.

In this second prompt, I had to take some precautions:

- Temperature = 0. The same applies here: I don’t want creativity, I want rigor. (Worth noting: DeepSeek’s documentation lists temperature among the parameters deepseek-reasoner accepts but ignores, so here the setting mostly documents intent.)

- Evaluation tone. AIs don’t like to criticize things. That is why I was explicit for games with less than 50% positive reviews: destroy the game. Is it a masterpiece? Celebrate it. Is it run-of-the-mill? Conclude the review with one positive and one negative point.

- Consistency with the genre. Farming games don’t aim for realistic Unreal Engine 5 graphics, and business simulators are not law simulators. I had to make several corrections during the initial tests.

- Steam information. Description, Steam reviews, tags, etc.: extra material for the AI to analyze.

The result? A game with a defined subgenre and 5 evaluated criteria.

Call Example I - Prompt for genre selection (DeepSeek Chat)

```json
{
  "model": "deepseek-chat",
  "temperature": 0,
  "max_tokens": 500,
  "messages": [
    {
      "role": "system",
      "content": "You are a strict librarian. You classify games into a fixed set of specific micro-genres.\nContext: You will receive game details and a List of Allowed Genres.\nTask: Identify the SINGLE Allowed Genre that best matches the game.\n\nRules:\n1. Output MUST be a valid JSON array containing EXACTLY ONE string.\n2. The string MUST BE AN EXACT COPY from the Allowed Genres list provided.\n3. Do NOT invent new genres. Do NOT use Steam tags that are not in the Allowed Genres list.\n4. Select exactly 1 genre.\n5. If absolutely none of the Allowed Genres fit, return [\"Uncategorized\"]."
    },
    {
      "role": "user",
      "content": "## GAME DETAILS\nTitle: Diablo IV\nDescription: Return to darkness. The endless battle between the High Heavens and the Burning Hells rages on as chaos threatens to consume Sanctuary. With ceaseless demons to slaughter, countless abilities to master, nightmarish Dungeons, and Legendary loot, this vast, open world brings the promise of adventure and devastation. Survive and conquer darkness—or succumb to the shadows.\nTags: [Action, RPG, Hack and Slash, Loot, Multiplayer, Dark Fantasy, Open World, Character Customization, Co-op, Online Co-Op, PvP, Replay Value, Story Rich, Atmospheric, Action RPG]\n\n## ALLOWED GENRES LIST\nYou MUST select ONLY from the genres listed below. Do not use Steam tags.\n\n[\"Tactical RTS\", \"Grand Strategy\", \"4X Strategy\", \"Turn-Based Tactics\", \"Real-Time Tactics\", \"Colony Sim\", \"City Builder\", \"Tower Defense\", \"Auto Battler\", \"Soulslike\", \"Looter ARPG\", \"CRPG\", \"JRPG\", \"Tactical RPG\", \"Creative Sandbox\", \"Open World RPG\", \"Open World Action\", \"Dungeon Crawler\", \"Metroidvania\", \"Roguelike\", \"Roguelite\", \"Character Action\", \"Hack and Slash\", \"Beat em Up\", \"2D Platformer\", \"3D Platformer\", \"Boomer Shooter\", \"Arena Shooter\", \"Hero Shooter\", \"Tactical Shooter\", \"Extraction Shooter\", \"Immersive Sim\", \"Looter Shooter\", \"Military Sim\", \"MMORPG\", \"Battle Royale\", \"MOBA\", \"Survival Craft\", \"Life Sim\", \"Farming Sim\", \"Management Sim\", \"Tycoon\", \"Vehicle Sim\", \"Flight Sim\", \"Space Sim\", \"Arcade Racing\", \"Sim Racing\", \"Kart Racing\", \"2D Fighter\", \"3D Fighter\", \"Platform Fighter\", \"Survival Horror\", \"Psychological Horror\", \"Horror\", \"Puzzle Platformer\", \"Puzzle\", \"Visual Novel\", \"Interactive Fiction\", \"Walking Simulator\", \"Point and Click\", \"Hidden Object\", \"Deckbuilder\", \"Card Game\", \"Digital Board Game\", \"Football Soccer\", \"Basketball\", \"Sports General\", \"Rhythm Game\", \"Physics Sandbox\", \"Cozy\", \"Experimental\", \"Hybrid\", \"Uncategorized\", \"Action Adventure\", \"Stealth\", \"Monster Tamer\", \"Twin Stick Shooter\", \"Party Game\", \"Idle Clicker\", \"Musou Horde\", \"Wargame\", \"First Person Shooter\", \"Third Person Shooter\", \"Adult Visual Novel\", \"Arcade\", \"Arcade Action\", \"Vehicular Soccer\", \"VR Game\", \"Business Sim\"]\n\n## INSTRUCTIONS\n1. Analyze the game details.\n2. Pick the SINGLE Allowed Genre that best matches the game.\n3. Return ONLY a JSON array with EXACTLY ONE string, e.g., [\"Genre A\"].\n4. If the game does not fit ANY of the allowed genres, return [\"Uncategorized\"].\n5. STRICTLY NO OTHER TEXT."
    }
  ]
}
```

Expected Result: ["Looter ARPG"]
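Even at temperature 0, it is safer not to trust the reply blindly, since a wrong genre means the wrong criteria. A minimal, hypothetical validator could accept the answer only if it is a JSON array with exactly one string from the allowed list, and fall back to "Uncategorized" otherwise:

```go
package main

import (
	"encoding/json"
	"fmt"
)

const fallbackGenre = "Uncategorized"

// parseGenre decodes the classifier reply, which must be a JSON array with
// exactly one string taken from the allowed list. Any other reply falls back
// to "Uncategorized" and returns an error describing what went wrong.
func parseGenre(reply string, allowed map[string]bool) (string, error) {
	var genres []string
	if err := json.Unmarshal([]byte(reply), &genres); err != nil {
		return fallbackGenre, fmt.Errorf("reply is not a JSON string array: %w", err)
	}
	if len(genres) != 1 {
		return fallbackGenre, fmt.Errorf("expected exactly 1 genre, got %d", len(genres))
	}
	if !allowed[genres[0]] {
		return fallbackGenre, fmt.Errorf("genre %q is not in the allowed list", genres[0])
	}
	return genres[0], nil
}

func main() {
	allowed := map[string]bool{"Looter ARPG": true, "Soulslike": true, "Uncategorized": true}
	fmt.Println(parseGenre(`["Looter ARPG"]`, allowed))
	fmt.Println(parseGenre(`["Action RPG"]`, allowed)) // a Steam tag, not an allowed genre
}
```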

Call Example II - Complete Prompt for Analysis (DeepSeek Reasoner)

```json
{
  "model": "deepseek-reasoner",
  "temperature": 0,
  "max_tokens": 4096,
  "messages": [
    {
      "role": "system",
      "content": "Role: You are the \"2miu Curator\", an elite, ruthless, and highly technical video game critic. Your job is to analyze games based on specific structural criteria, not just \"vibes\".\n\nCore Directives:\n1. Brutal Honesty: Do not sugarcoat failures. However, remain objective about successes even in flawed products.\n2. Context Isolation: Analyze the game ONLY based on the provided Description, Tags, and Metrics. Do not hallucinate features.\n3. Genre-Specific: You will be given a target Genre and 5 specific criteria. Evaluate the game strictly against these criteria.\n4. The Score Contextualization: A low User Review Score (< 50%) is a major red flag, BUT you must diagnose the cause.\n - If the score is low due to **Technical/Gameplay issues** (bugs, clunky controls), punish the relevant criteria mercilessly.\n - If the score is low due to **Monetization/Policy** (review bombing, battle pass greed) but the core game is functional, rate the Gameplay criteria HIGH (objectively) and punish the Value/Progression criteria.\n - Do NOT let \"Community Hate\" contaminate criteria like \"Graphics\" or \"Gunplay\" unless those specific aspects are bad.\n\nOutput Format:\nYou must respond with valid JSON only. No markdown formatting, no conversational filler. Do not calculate the Tier, just provide the scores.\nStructure:\n{\n \"criteria\": [\n {\n \"name\": \"Criterion Name From Input\",\n \"score\": 1-10,\n \"justification\": \"15-25 word explanation. Be specific.\",\n \"note\": \"Optional legacy field\"\n }\n ],\n \"summary\": \"30-50 word verdict. If scores are high, be poetic. If scores are low, be sarcastic and savage.\"\n}"
    },
    {
      "role": "user",
      "content": "## TARGET GAME ANALYSIS\n\n**Metadata:**\n- Title: Diablo IV\n- Developer: Blizzard Entertainment\n- Publisher: Blizzard Entertainment\n- Release Date: Jun 5, 2023\n- Early Access: no\n\n**The Data (Facts):**\n- Steam Description: \"Return to darkness. The endless battle between the High Heavens and the Burning Hells rages on as chaos threatens to consume Sanctuary. With ceaseless demons to slaughter, countless abilities to master, nightmarish Dungeons, and Legendary loot, this vast, open world brings the promise of adventure and devastation. Survive and conquer darkness—or succumb to the shadows.\"\n- Steam Tags (Sanitized): [Action, RPG, Hack and Slash, Loot, Multiplayer, Dark Fantasy, Open World, Character Customization, Co-op, Online Co-Op, PvP, Replay Value, Story Rich, Atmospheric, Action RPG]\n- Lifetime Review Score: 68% (based on 125,432 reviews)\n- Recent Review Score: 65%\n\n**Genre Context:**\nI have classified this game as: **Looter ARPG**\n\n**Analysis Task:**\nEvaluate the game based on these 5 specific criteria for Looter ARPG. Rate each from 1-10.\n\n **CRITICAL INSTRUCTION: TAG-BASED RELATIVITY & NUANCE**\nInterpret the criteria through the lens of Steam Tags to establish the correct baseline expectations:\n\n1. **The Context Rule**: Use Tags to calibrate the scale. (e.g. 'Repetitive' is fatal for a Story game, but expected for a 'Musou' or 'Diablo-like'. 'Simplicity' is a virtue for 'Cozy', a flaw for 'Grand Strategy').\n2. **The Execution Rule**: Judge the game by the standards of its specific micro-genre (e.g. 'Idle Clicker', 'Visual Novel'), not by general gaming standards. Did it succeed at what it TRIED to be?\n3. **Conflict Resolution**: If Tags contradict the assigned Genre (e.g. 'Relaxing' tag on a 'Survival Horror'), trust the **Genre** assignment and these specific Criteria over the user tags.\n\n **MANDATORY: CRITERIA DEFINITION ADHERENCE**\nYou must evaluate based ONLY on the specific definition provided below for each criterion. Do not use your own general definition of these terms.\n- If the criterion 'Graphics' says 'Evaluate Performance', you must evaluate FPS/Bugs, NOT Art Style.\n- Strict adherence to the provided description is required for consistency across the database.\n\n1. **Loot Satisfaction**: Do drops feel rewarding? Are \"jackpot\" moments exciting?\n2. **Build Diversity**: Is endgame theorycrafting deep? Can you respec easily?\n3. **Endgame Loop**: Is the max-level content varied? Are goals clear?\n4. **Combat Flow**: Is skill responsiveness high? Does destroying hordes feel good?\n5. **Player Respect**: Is monetization fair? Can you play offline/solo if desired?\n\n**Constraint Checklist:**\n- Analyze the Scores (Lifetime: 68%, Recent: 65%). Look for the context notes above regarding Redemption Arcs or Deterioration.\n- Is a low score due to bad gameplay or review bombing? If gameplay is polished, rate gameplay criteria high regardless of the score.\n- Are the tags consistent with the genre?\n\nGenerate the analysis JSON now."
    }
  ]
}
```

Expected Result:

```json
{
  "criteria": [
    {
      "name": "Loot Satisfaction",
      "score": 7,
      "justification": "Legendary drops feel impactful with clear power spikes, but itemization can feel repetitive with too many incremental upgrades rather than exciting discoveries."
    },
    {
      "name": "Build Diversity",
      "score": 8,
      "justification": "Deep skill trees and paragon boards allow for meaningful build experimentation. Respec costs are reasonable, encouraging theorycrafting without punishing mistakes."
    },
    {
      "name": "Endgame Loop",
      "score": 6,
      "justification": "Nightmare Dungeons and world events provide variety initially, but the loop becomes grindy. Seasonal content helps but core activities lack long-term engagement."
    },
    {
      "name": "Combat Flow",
      "score": 9,
      "justification": "Combat is responsive and satisfying. Skill animations feel weighty, horde clearing is visceral, and the feedback loop of killing demons is consistently engaging."
    },
    {
      "name": "Player Respect",
      "score": 5,
      "justification": "Battle pass and cosmetic shop exist but don't affect gameplay. However, always-online requirement prevents true solo play, and seasonal model creates FOMO pressure."
    }
  ],
  "summary": "A polished ARPG with exceptional combat and deep build systems, but the endgame loop grows stale and always-online requirement undermines player agency. The loot chase is satisfying but lacks the dopamine hits of its predecessors."
}
```
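Since the prompt tells the model not to calculate the Tier, the final score has to be computed on the backend. The post does not spell out the formula, so the sketch below ASSUMES the curator score is the plain average of the five criteria; the types and the curatorScore function are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// CriterionScore mirrors one entry of the "criteria" array.
// float64 tolerates replies such as 7.5 as well as integers.
type CriterionScore struct {
	Name          string  `json:"name"`
	Score         float64 `json:"score"`
	Justification string  `json:"justification"`
}

// Analysis mirrors the JSON the analysis prompt asks for.
type Analysis struct {
	Criteria []CriterionScore `json:"criteria"`
	Summary  string           `json:"summary"`
}

// curatorScore decodes an analysis reply, validates it, and averages the scores.
// ASSUMPTION: the curator score is the unweighted mean of the five criteria.
func curatorScore(reply []byte) (float64, error) {
	var a Analysis
	if err := json.Unmarshal(reply, &a); err != nil {
		return 0, err
	}
	if len(a.Criteria) != 5 {
		return 0, fmt.Errorf("expected 5 criteria, got %d", len(a.Criteria))
	}
	sum := 0.0
	for _, c := range a.Criteria {
		if c.Score < 1 || c.Score > 10 {
			return 0, fmt.Errorf("criterion %q has out-of-range score %v", c.Name, c.Score)
		}
		sum += c.Score
	}
	return sum / float64(len(a.Criteria)), nil
}

func main() {
	reply := []byte(`{"criteria":[
		{"name":"Loot Satisfaction","score":7},
		{"name":"Build Diversity","score":8},
		{"name":"Endgame Loop","score":6},
		{"name":"Combat Flow","score":9},
		{"name":"Player Respect","score":5}],
		"summary":"..."}`)
	fmt.Println(curatorScore(reply))
}
```

With the Diablo IV scores above (7, 8, 6, 9 and 5), this assumption yields 7.0.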

Point 3: Frontend

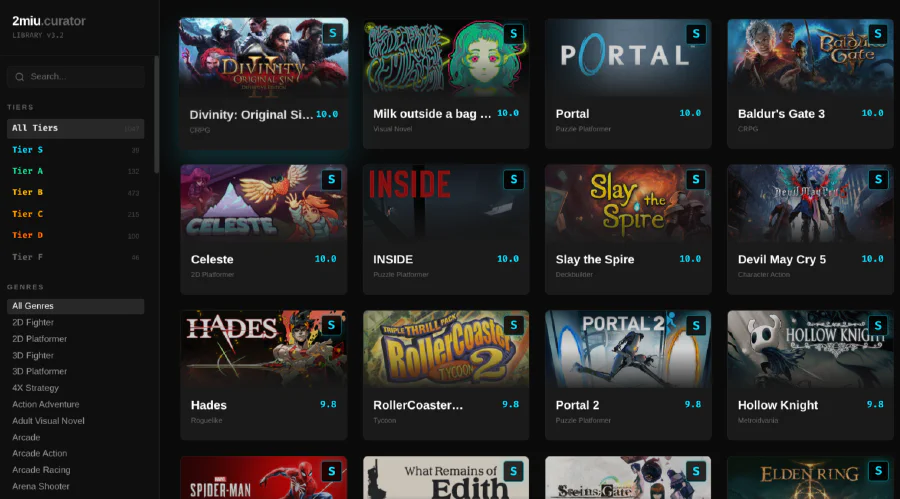

As I mentioned, I initially planned to use Wails but decided to stick with SolidJS and build a web app instead. The core concept of the system has always been a tier list based on game genres or subgenres. It also needed two other features: a Tier filter and a game search. The search functionality is standard. The Tier filter, on the other hand, lets me see every S Tier game in my library, or even every F Tier one.

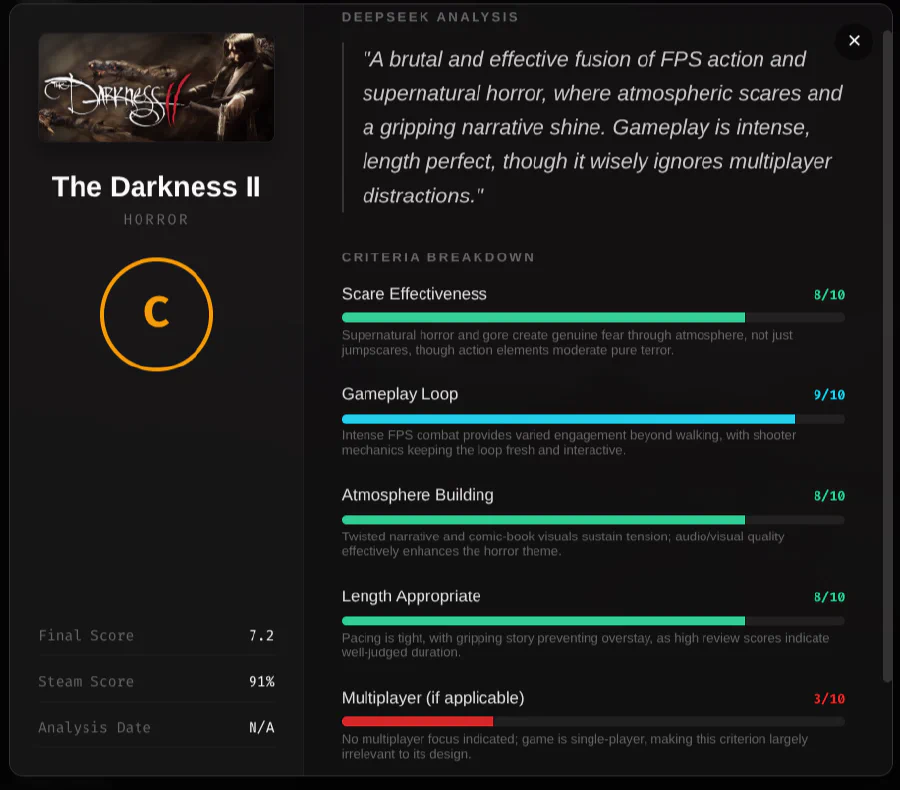

So how were the Tiers defined?

Easy!

| Tier | Score Range | Description |
|---|---|---|
| S | 9.5 - 10.0 | Exceptional |
| A | 9.0 - 9.49 | Excellent |
| B | 8.0 - 8.99 | Very good |
| C | 7.0 - 7.99 | Good |
| D | 6.0 - 6.99 | Fair |
| E | 5.0 - 5.99 | Weak |
| F | 0.0 - 4.99 | Poor |
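The table translates naturally into a threshold function. This hypothetical sketch uses half-open ranges (for example, A means 9.0 ≤ score < 9.5), which also covers values such as 9.495 that fall between the rounded bounds printed in the table.

```go
package main

import "fmt"

// tierFor maps a 0-10 curator score to a tier letter.
// Each case is a lower bound, so the ranges are half-open and gap-free.
func tierFor(score float64) string {
	switch {
	case score >= 9.5:
		return "S"
	case score >= 9.0:
		return "A"
	case score >= 8.0:
		return "B"
	case score >= 7.0:
		return "C"
	case score >= 6.0:
		return "D"
	case score >= 5.0:
		return "E"
	default:
		return "F"
	}
}

func main() {
	for _, s := range []float64{9.7, 9.495, 7.0, 4.99} {
		fmt.Println(s, tierFor(s))
	}
}
```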

Now that this is established we can talk more about the screens.

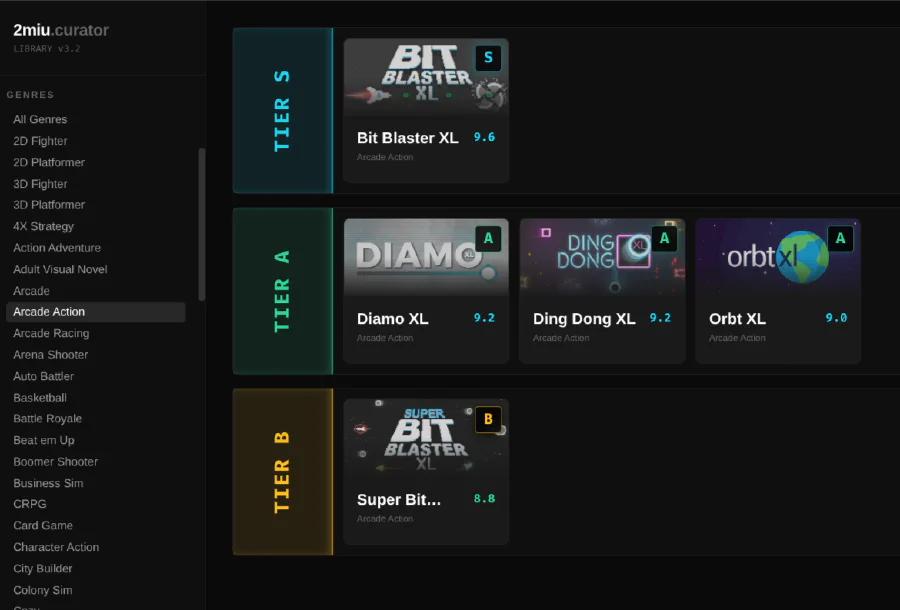

Main Screen - Tier List

The main screen of the application displays games organized in a tier list format, similar to what you would see in fighting game communities. When you select a genre from the sidebar, the system displays all analyzed games of that genre, organized by Tier (S, A, B, C, D, E, F).

Each game card shows:

- The game’s cover image (header image from Steam)

- The game title

- The Tier badge (S, A, B, C, D, E, or F) in the top-right corner

- The platform logo (Steam or GOG) in the bottom-right corner

- The genre name

- The curator score (0-10 scale)

Games are automatically sorted within each tier by their score, with the highest-scoring games appearing first. Clicking on any game card opens a detailed modal showing the complete analysis, including all 5 criteria scores, justifications, and the AI-generated summary.
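The per-tier ordering can be sketched as grouping cards by tier and then sorting each group by score. The Card type and groupByTier function are hypothetical; the post does not say whether this happens in the Go backend or in the SolidJS frontend.

```go
package main

import (
	"fmt"
	"sort"
)

// Card is a hypothetical view model for one game card.
type Card struct {
	Title string
	Genre string
	Tier  string
	Score float64
}

// tierOrder lists the tier-list rows from best to worst.
var tierOrder = []string{"S", "A", "B", "C", "D", "E", "F"}

// groupByTier buckets cards by tier and sorts each bucket by score, highest first.
func groupByTier(cards []Card) map[string][]Card {
	rows := make(map[string][]Card)
	for _, c := range cards {
		rows[c.Tier] = append(rows[c.Tier], c)
	}
	for _, row := range rows {
		// Sorting the slice reorders the backing array shared with the map value.
		sort.SliceStable(row, func(i, j int) bool { return row[i].Score > row[j].Score })
	}
	return rows
}

func main() {
	cards := []Card{
		{"Game A", "Metroidvania", "B", 8.2},
		{"Game B", "Metroidvania", "S", 9.6},
		{"Game C", "Metroidvania", "B", 8.7},
	}
	rows := groupByTier(cards)
	for _, tier := range tierOrder {
		for _, c := range rows[tier] {
			fmt.Println(tier, c.Title, c.Score)
		}
	}
}
```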

Genre Filter

The sidebar provides a comprehensive list of all 84 genres available in the system. By default, “All Genres” is selected, which shows games from every genre. When you click on a specific genre, the tier list updates to show only games classified under that genre.

This filtering mechanism is essential because, as I explained earlier, each genre has its own specific evaluation criteria. A game that lands in Tier C in one genre could be Tier S in another, depending on how well it matches the expectations of that specific subgenre.

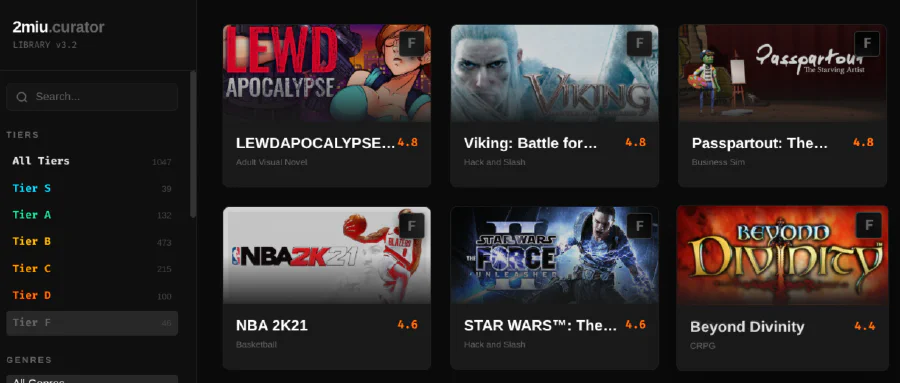

Tier Filter

In addition to genre filtering, the sidebar also provides a Tier filter. This allows you to view all games of a specific tier across all genres, or combine it with a genre filter to see, for example, all Tier S games in the “Soulslike” genre.

The tier filter is particularly useful for:

- Discovering the best games in my library (Tier S)

- Identifying games that might need re-evaluation (Tier F)

- Comparing games of similar quality across different genres
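Combining the two filters is a simple conjunction in which an empty value means “no filter” (“All Genres” or all tiers). This is a hypothetical sketch with an illustrative Card type, not the app’s actual query code.

```go
package main

import "fmt"

// Card is a hypothetical view model for one game card.
type Card struct {
	Title string
	Genre string
	Tier  string
	Score float64
}

// filterCards keeps cards that match both filters.
// An empty genre or tier disables that filter.
func filterCards(cards []Card, genre, tier string) []Card {
	var out []Card
	for _, c := range cards {
		if genre != "" && c.Genre != genre {
			continue
		}
		if tier != "" && c.Tier != tier {
			continue
		}
		out = append(out, c)
	}
	return out
}

func main() {
	cards := []Card{
		{"Game A", "Soulslike", "S", 9.6},
		{"Game B", "Soulslike", "B", 8.2},
		{"Game C", "Metroidvania", "S", 9.5},
	}
	// All Tier S games in the "Soulslike" genre.
	fmt.Println(filterCards(cards, "Soulslike", "S"))
}
```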

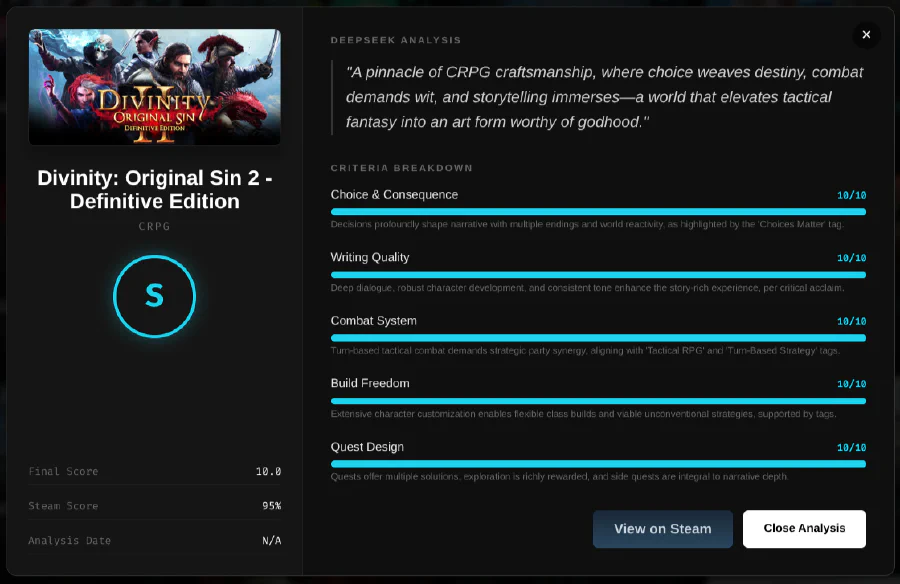

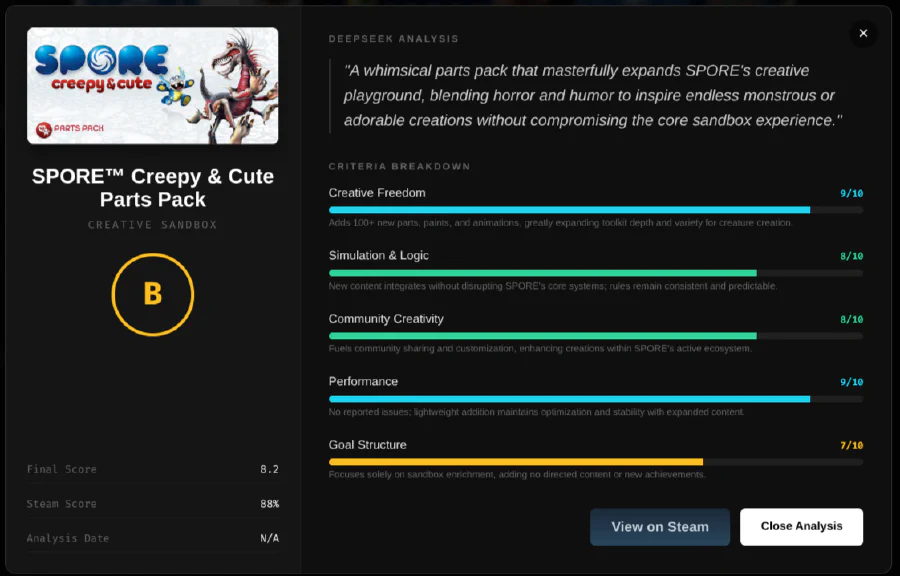

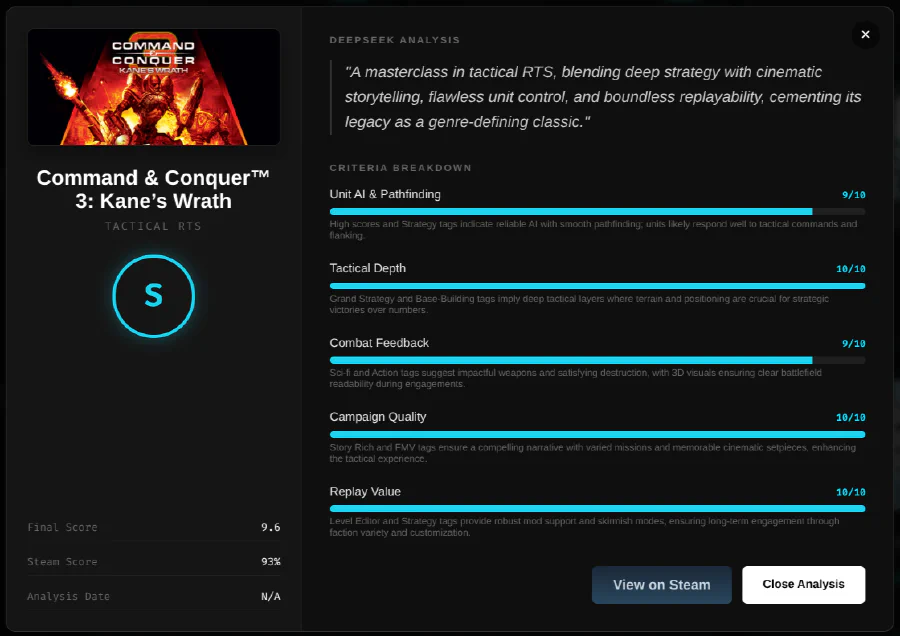

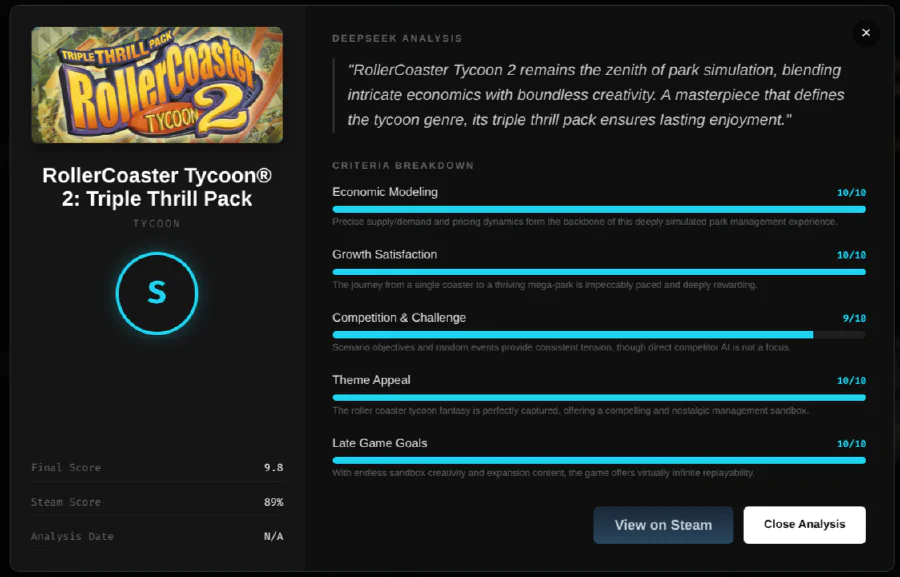

The Curation

This is the core of the project: the results of the curation performed by DeepSeek based on my specific criteria. The screen shows the five analyzed criteria and a paragraph summarizing the curator’s perspective, in the classic Steam Curators style. You will also find the Curation Score, the Steam Score, and a link to the game on Steam.

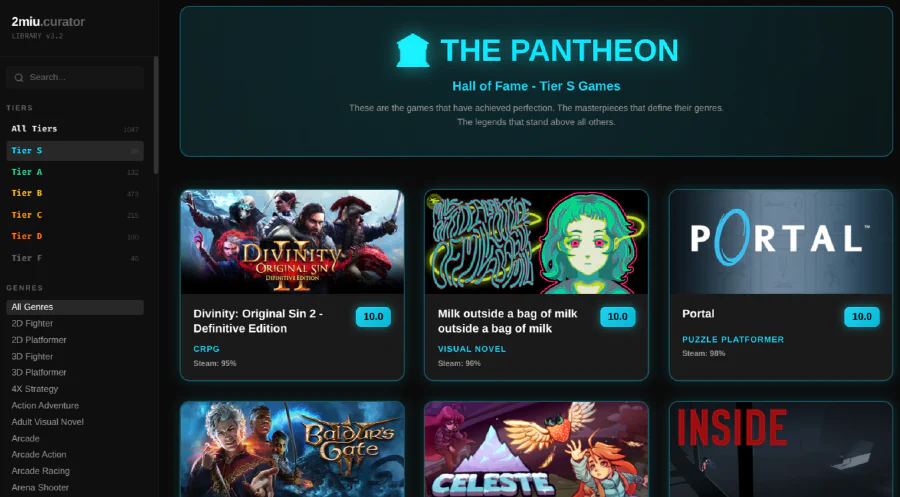

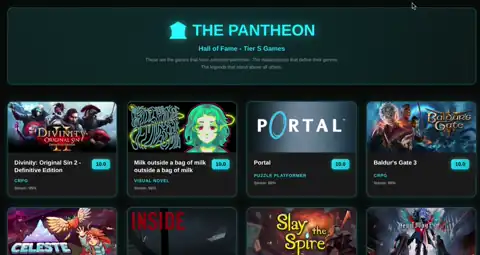

The Pantheon

The Pantheon is a special screen dedicated exclusively to Tier S games - the masterpieces that have achieved a score of 9.5 or higher. This is the Hall of Fame of my game library.

The Pantheon displays all Tier S games in a beautiful grid layout, sorted by score (highest first). Each card shows:

- The game cover with a golden glow effect

- The game title

- The exact score (e.g., 9.7)

- The genre classification

- The Steam review score for comparison

This screen serves as a curated collection of the absolute best games in my library, regardless of genre. It’s the place to go when you want to find the next masterpiece to play.

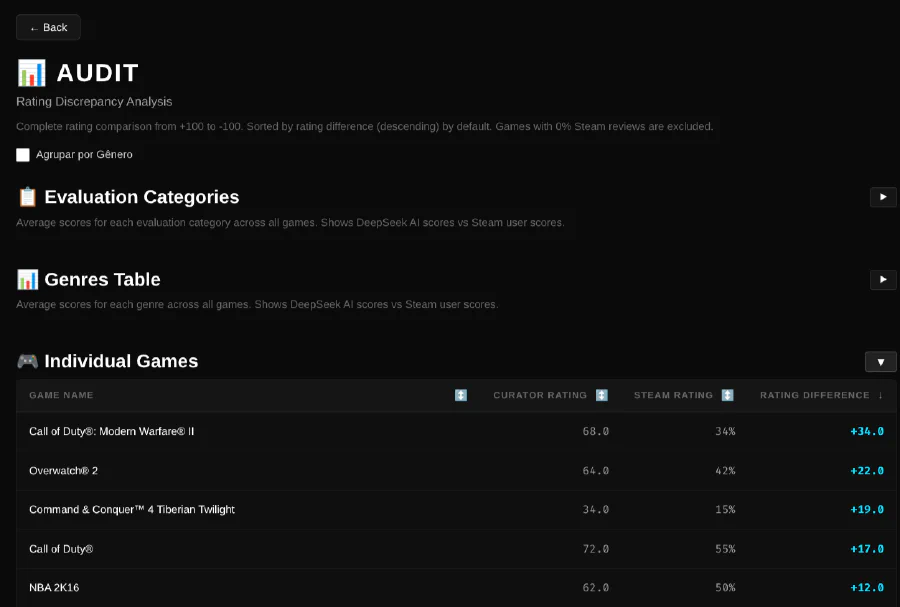

Audit Screen

The Audit screen is a powerful analytical tool that allows me to compare DeepSeek ratings with Steam’s user reviews. This screen provides several views:

Individual Games View: Shows all games with their curator rating (converted to a 0-100 scale), their Steam rating, and the difference between them. This helps identify:

- Games where the curator is more lenient than Steam users

- Games where the curator is harsher than the community

- Potential misclassifications or evaluation errors

Genre Groups View: Aggregates data by genre, showing average ratings for each genre. This reveals which genres tend to score higher or lower in the curation system compared to Steam.

Evaluation Categories View: Breaks down the analysis by the specific evaluation criteria (the 5 criteria per genre), showing which aspects of games tend to score better or worse.

The Audit screen is essential for maintaining quality and consistency in the curation system, allowing me to identify patterns and potential improvements in the evaluation criteria.

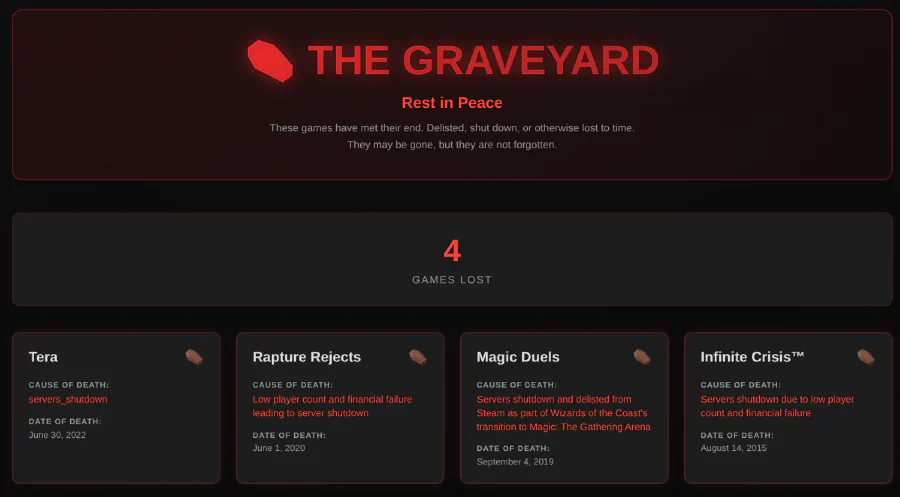

Graveyard Screen

The Graveyard is a memorial for games that have been delisted, shut down, or otherwise lost to time. These are games that can no longer be purchased or played, often due to:

- Server shutdowns (online-only games)

- Licensing issues

- Developer/publisher decisions

- Legal disputes

Each entry in the Graveyard shows:

- The game title

- The cause of death (e.g., “Servers shutdown”, “Delisted from Steam”, “Abandoned by developers”)

- The date of death (when the game became unavailable)

The Graveyard serves as a historical record of games that were once part of my library but are now inaccessible. It’s a reminder of the impermanence of digital media and the importance of preservation in the gaming industry.

These screens work together to provide a comprehensive view of the game library, allowing me to discover hidden gems, identify the best games to play, and maintain a curated collection that reflects my personal gaming preferences and standards.

Problems (and plenty of them!)

Claiming that the plan described above went off 100% without issues would be a fantasy that no developer has ever actually lived. There were, in fact, many problems during the brief development of this application.

Problem number 1: The Genres

As I mentioned, the curation covers 84 genres. Was this the plan from the start? Far from it. Initially, there were 63 genres, which is already a lot. However, after reviewing the analysis results, I found some inconsistencies because certain games simply didn’t fit into any of the existing categories. Let’s look at the example below.

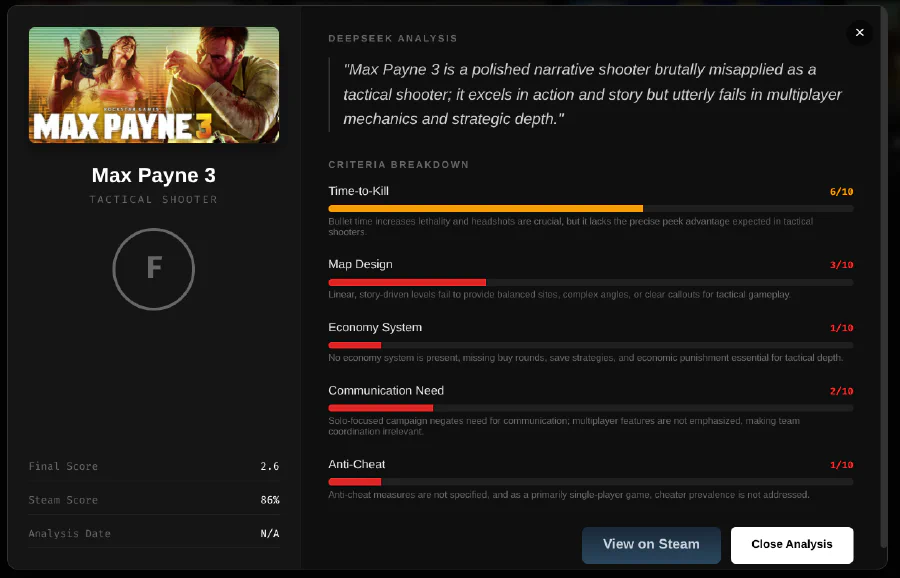

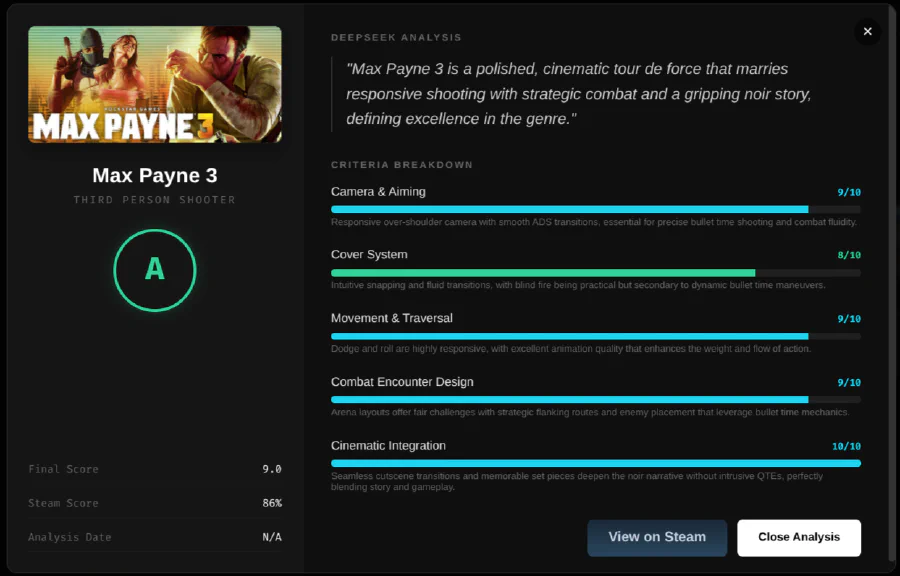

Since I avoided broad genres to focus on being specific, general labels like Action, Adventure, FPS, or Third Person Shooter were discarded. However, we then encountered games that didn’t fit into any of the defined subgenres. This is exactly what happened with Max Payne 3. With the available options, DeepSeek decided to categorize it as a Tactical Shooter. The result? Max Payne was judged as a mediocre Tactical Shooter, receiving a score of 2.6. The issue is that it isn’t a Tactical Shooter at all.

As I went through the results for more than 1,000 games hunting for these anomalies, a clear problem emerged: the games were being analyzed, but the “measuring stick” used for some of them didn’t match the game itself.

So yes, I had to adjust this manually. But honestly, who can successfully plan every possible game subgenre perfectly on the very first try?

Now Max Payne 3 has the correct score.

Problem number 2: The discrepancy in analyzed categories

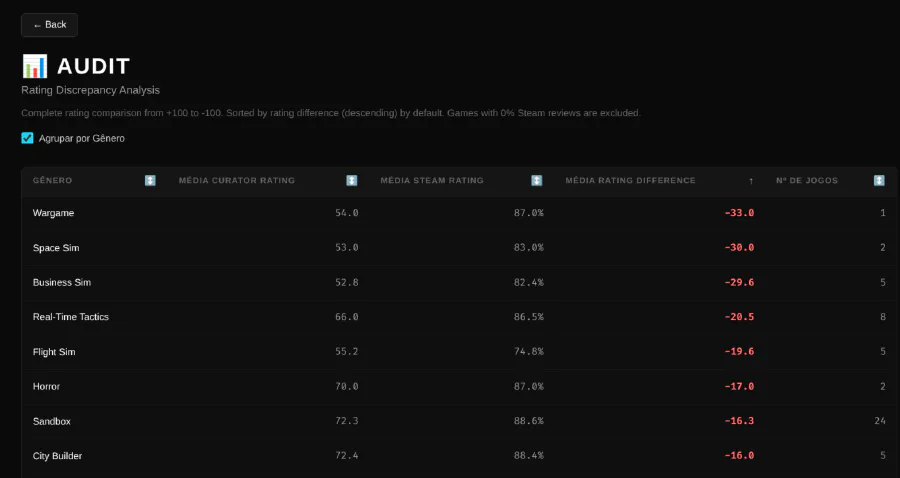

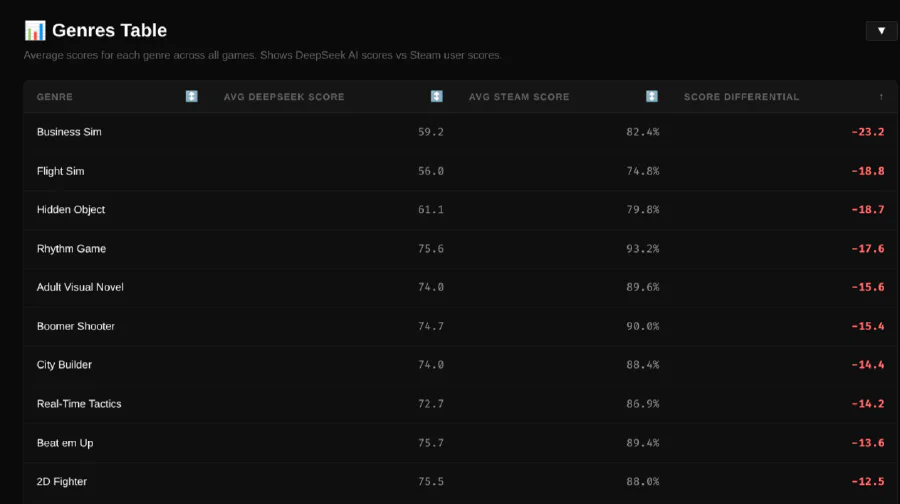

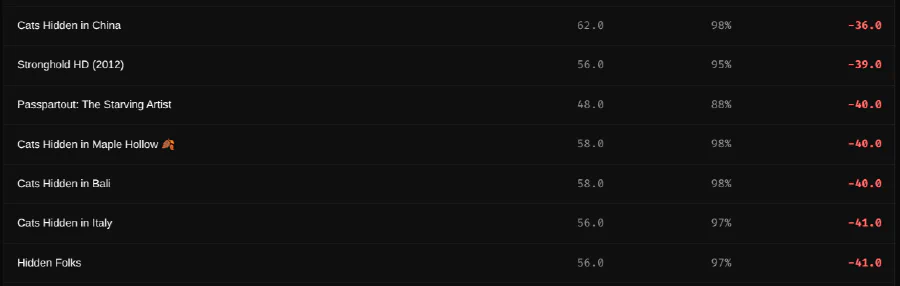

Another important metric to evaluate is the difference between the DeepSeek scores and the Steam scores. Here, we have two types of discrepancies worth analyzing: Genre vs. Steam score and Category vs. Steam score. Of course, the goal isn’t to match the AI’s score exactly to Steam’s because then the project wouldn’t make any sense. However, huge differences usually suggest problems.

Let’s start with the first one.

To calculate the variation rate, the formula is simple. Given the average values of all games in a genre:

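Variation = (average DeepSeek score × 10) - average Steam score

Multiplying by 10 puts the DeepSeek score (0-10) on the same 0-100 scale as Steam’s percentage of positive reviews, so the variation is measured in points on that scale, which I report as percentages below. If the variation is negative, DeepSeek (using my criteria) rated the genre’s games more harshly than the public did. If it is positive, it rated them more generously. The closer the result is to zero, the stronger the consensus between my curation and Steam.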

Positive variations are expected since a game might have negative reviews on Steam for specific reasons (like using AI assets, controversy with the creator, or a broken update) which hurts the game’s reputation but doesn’t necessarily affect its actual mechanics.

Negative variations, however, generally indicate a problem. Almost always, the problem is the same: a specific criterion I planned doesn’t make sense for that specific game.
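The genre-level variation described above can be sketched as follows. The Rated type and genreVariation function are hypothetical, the sample numbers are made up, and Steam scores are assumed to be the percentage of positive reviews (0-100). Because averages are linear, averaging the per-game differences would give the same result as subtracting the averages.

```go
package main

import "fmt"

// Rated is a hypothetical per-game row for the Audit screen.
type Rated struct {
	Genre   string
	Curator float64 // DeepSeek score, 0-10
	Steam   float64 // Steam positive-review percentage, 0-100
}

// genreVariation returns (avg DeepSeek score * 10) - avg Steam score per genre.
func genreVariation(games []Rated) map[string]float64 {
	type acc struct {
		curator, steam float64
		n              int
	}
	sums := make(map[string]*acc)
	for _, g := range games {
		a, ok := sums[g.Genre]
		if !ok {
			a = &acc{}
			sums[g.Genre] = a
		}
		a.curator += g.Curator
		a.steam += g.Steam
		a.n++
	}
	out := make(map[string]float64, len(sums))
	for genre, a := range sums {
		n := float64(a.n)
		out[genre] = (a.curator/n)*10 - a.steam/n
	}
	return out
}

func main() {
	games := []Rated{
		{"Horror", 5.0, 80}, {"Horror", 6.0, 70}, {"Puzzle", 8.0, 80},
	}
	fmt.Println(genreVariation(games))
}
```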

From the image above, let’s take 3 examples: Horror, Flight Sim, and Business Sim. In the analysis of Genres vs. Steam Score, we have a considerably negative percentage. I needed to investigate what was happening.

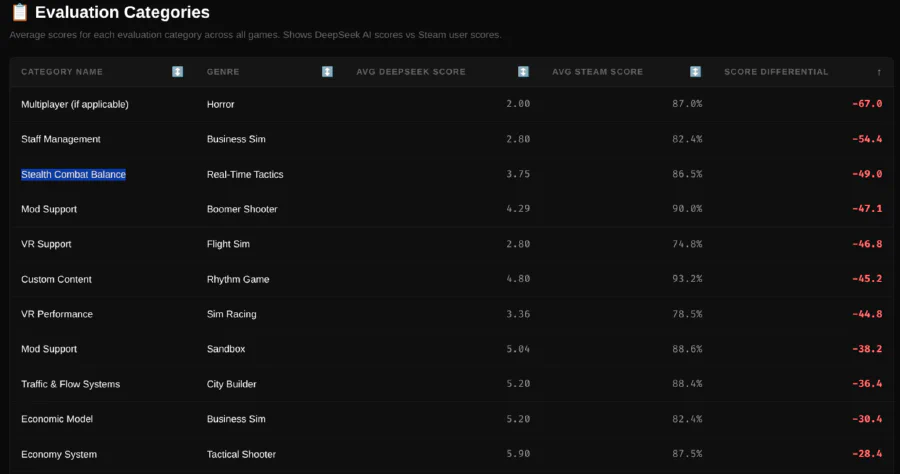

To do this, I performed the second type of analysis: Evaluated Criteria vs. Steam Score.

Here the scenario changes slightly. For specific criteria, high variations don’t always indicate a bug. Take Basketball games (basically the 2K series): the Monetization criterion shows a -24.5% variation, while On-Court Gameplay shows +20.5%. Once all the criteria are combined, the genre-level difference is just +2.5%, a minimal variation. This perfectly sums up what the 2K series is today: excellent gameplay with terrible monetization.

However, the genres identified as problematic in the previous analysis need to have their criteria examined here as well. Let’s go back to the genre examples mentioned earlier, where we can identify criteria that really don’t make sense.

Horror - Multiplayer Criterion. Variation of -67%

Business Sim - Staff Management Criterion. Variation of -54.4%

Flight Sim - VR Support Criterion. Variation of -46.8%

I think the problem becomes very clear with this analysis, right? Evaluating multiplayer in a horror game often doesn’t make sense (I made the mistake of focusing too much on Dead By Daylight). Staff management is usually not the core of a business sim where you typically just assign a generic role to an employee without dealing with laws, salary bonuses, or complex management. As for Flight Sims, while VR Support is a nice feature, it shouldn’t be a mandatory judging criterion per se.

The solution here was to review categories with variations above 25% (which is where I started identifying anomalies) and rethink the criteria being used.
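That review step can be sketched as a filter over per-criterion variations. The type and function names are hypothetical; the 25-point threshold comes from the text, and the sample values are the ones quoted earlier in this section.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// CriterionVariation is (avg criterion score * 10) - avg Steam score for one genre criterion.
type CriterionVariation struct {
	Genre     string
	Criterion string
	Variation float64
}

// flagOutliers returns criteria whose variation exceeds the threshold
// in absolute value, most extreme first.
func flagOutliers(vs []CriterionVariation, threshold float64) []CriterionVariation {
	var out []CriterionVariation
	for _, v := range vs {
		if math.Abs(v.Variation) > threshold {
			out = append(out, v)
		}
	}
	sort.Slice(out, func(i, j int) bool {
		return math.Abs(out[i].Variation) > math.Abs(out[j].Variation)
	})
	return out
}

func main() {
	vs := []CriterionVariation{
		{"Horror", "Multiplayer", -67},
		{"Business Sim", "Staff Management", -54.4},
		{"Flight Sim", "VR Support", -46.8},
		{"Basketball", "Monetization", -24.5},
		{"Basketball", "On-Court Gameplay", 20.5},
	}
	for _, v := range flagOutliers(vs, 25) {
		fmt.Printf("%s / %s: %+.1f\n", v.Genre, v.Criterion, v.Variation)
	}
}
```

The two Basketball criteria stay under the threshold, which matches the reading above that their large but opposite variations are expected rather than a bug.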

That review brought the variations down to the values below. A maximum genre variation of -23.2% is totally acceptable, and after manually analyzing the largest remaining variations, I concluded that the results make sense within MY CRITERIA.

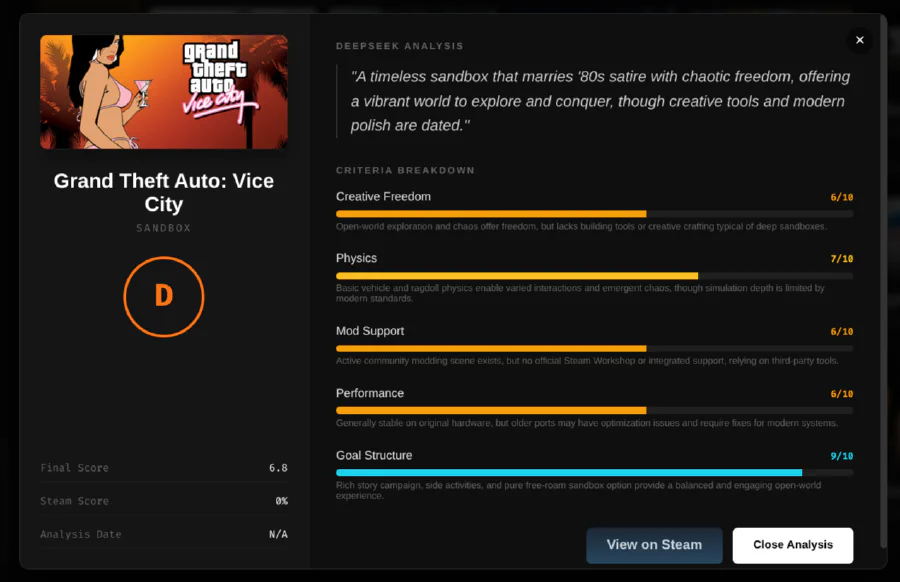

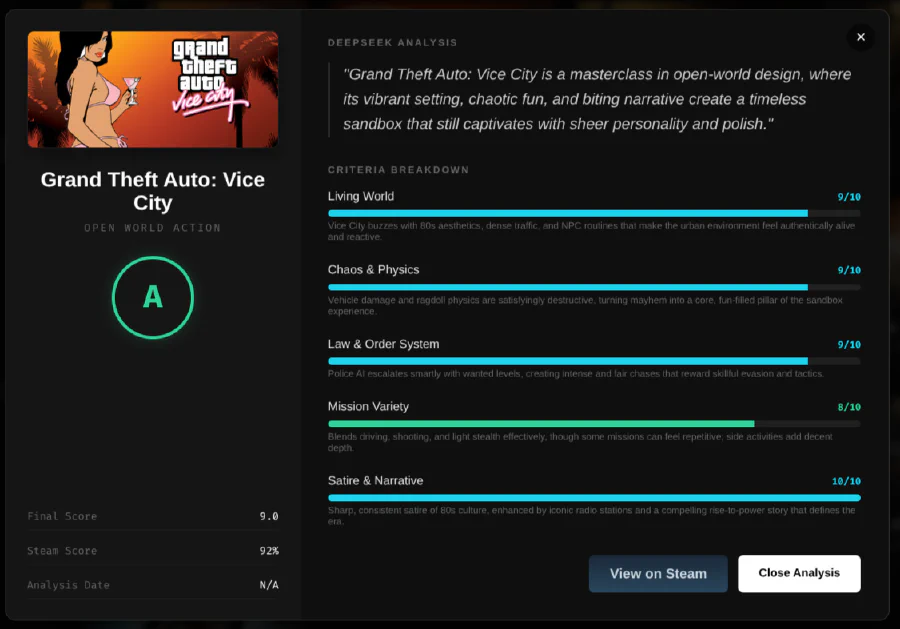

Problem number 3: The Genre Split

Some genres are simply too broad. This was the case with Sandbox.

When I initially created the genre list, I thought Sandbox would be a reasonable category. After all, it’s a well-known term in gaming, right? Well, the problem is that Sandbox covers everything from GTA V to Spore. Yes, both are “sandbox” games in the sense that they give you the freedom to do whatever you want. But the kind of freedom they offer is fundamentally different.

GTA V is about causing chaos in a realistic open world, stealing cars, shooting people, and experiencing a cinematic crime story. Spore is about creating creatures from scratch, evolving them, building civilizations, and conquering the galaxy. They share the “sandbox” label, but evaluating them with the same criteria would be absurd.

Imagine judging GTA V on “creature creation depth” or Spore on “wanted level mechanics.” The AI would be completely lost, and the scores would be meaningless.

The solution? Split the genre in two.

I created Open World Action for games like GTA, Saints Row, Watch Dogs, and similar titles where you run around a realistic (or semi-realistic) open world causing mayhem and following a story. The criteria here focus on things like world interactivity, mission variety, traversal fun, and emergent chaos.

On the other hand, Creative Sandbox was born for games like Spore, Minecraft, Terraria, and similar titles where the focus is on creation, building, and expressing yourself through game mechanics. Here, the criteria shifted to tool depth, creative freedom, progression systems, and community features.
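To make the split concrete, here is one possible shape for the genre-to-criteria table. It is a hypothetical sketch: the names and structure are assumptions, and the criteria are the ones listed above. The broad “Sandbox” key simply no longer exists, so any game still assigned to it fails the lookup and has to be re-categorized:

```python
GENRE_CRITERIA: dict[str, list[str]] = {
    "Open World Action": ["World Interactivity", "Mission Variety", "Traversal Fun", "Emergent Chaos"],
    "Creative Sandbox": ["Tool Depth", "Creative Freedom", "Progression Systems", "Community Features"],
}


def criteria_for(genre: str) -> list[str]:
    """Return the criteria a game of this genre is judged by; unknown genres fail loudly."""
    try:
        return GENRE_CRITERIA[genre]
    except KeyError:
        raise KeyError(f"No criteria defined for genre {genre!r}") from None


if __name__ == "__main__":
    for game, genre in {"GTA V": "Open World Action", "Spore": "Creative Sandbox"}.items():
        print(f"{game}: {', '.join(criteria_for(genre))}")
```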

This single split immediately fixed dozens of miscategorized games. The scores started making sense again, and games were finally being judged by what they actually are, not by what a vague umbrella term suggests.

Problem number 4: The “Beloved” Games I Don’t Agree With

Here’s where things get personal. And a bit spicy.

Steam scores are democratic. Anyone can leave a review. And sometimes, democracy has… questionable taste.

Let me give you two examples that made me question humanity.

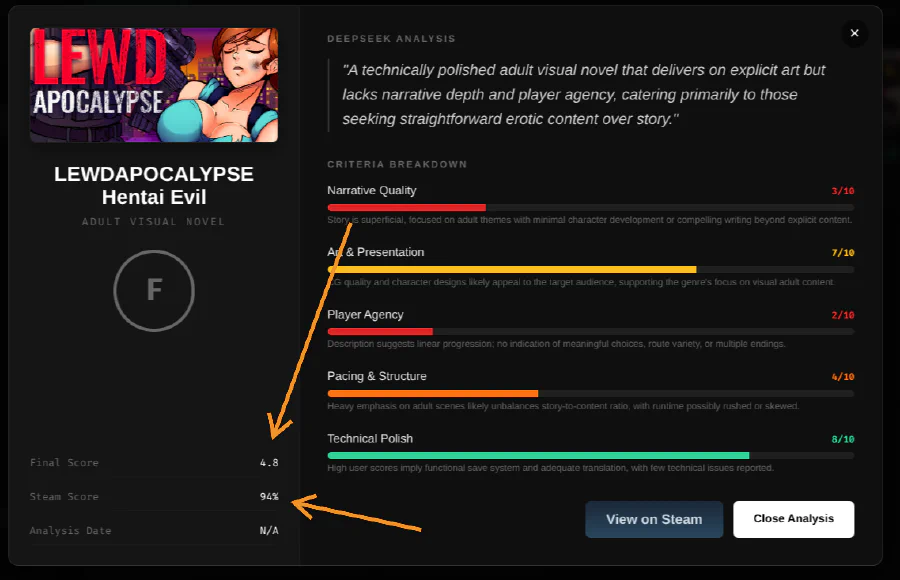

Exhibit A: Hentai Games.

If you’ve ever browsed Steam’s top-rated games with no filters, you’ve probably noticed something peculiar. Adult visual novels and hentai games often have overwhelmingly positive reviews. We’re talking 95%+ positive scores. Higher than some actual masterpieces of game design.

Why? Well, let’s just say there’s a very dedicated legion of… enthusiasts who rate these games with one hand while the other is… occupied elsewhere. These individuals will give a 10/10 to any game that shows anime breasts, regardless of whether the actual game has any substance, gameplay, or even basic quality control.

My criteria don’t care about your post-nut clarity rating. I evaluate Adult Visual Novels based on narrative quality, character development, art consistency, player agency, and pacing. If the story is garbage and the “gameplay” is just clicking through badly translated text to reach the next NSFW scene, the score will reflect that. Sorry, gentlemen of culture. The 2miu Curator has standards.

Exhibit B: Hidden Object Games.

Look, I understand that Hidden Object games have their audience. Usually, it’s people who want a relaxing experience, something to unwind with after a long day. And that’s perfectly valid.

But here’s my problem: these games are often too simple for my taste. The challenge is minimal, the mechanics are repetitive, and the “puzzles” barely qualify as such. You click on objects hidden in a cluttered scene. That’s it. That’s the whole game. Maybe there’s a mediocre mystery plot to tie it together.

Yet on Steam? These games often have Very Positive reviews. The audience loves them. They’re comfort food gaming.

My criteria for Hidden Object games evaluate things like scene design, hint systems, puzzle integration, and narrative hooks. Most of these games score around Tier C or D in my system. Not because they’re bad at what they do, but because what they do simply doesn’t impress me.

This is the point where I must remind you: this is MY curation. My criteria. My taste. If you love Hidden Object games, more power to you. But in the 2miu Curator universe, they’re not going to win any awards.

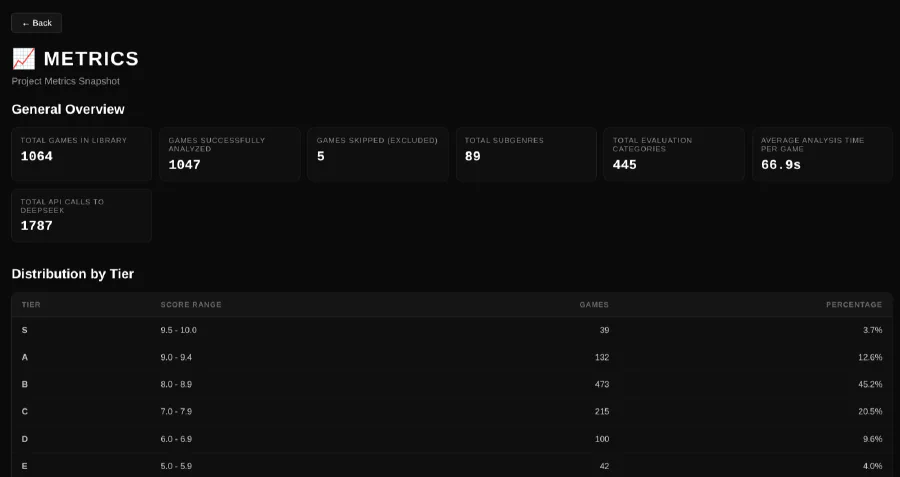

The Numbers: Project Metrics

Before diving into the challenges, let’s take a moment to appreciate the scale of this absurd endeavor. Numbers don’t lie, and these numbers tell the story of a weekend project that got slightly out of hand.

General Overview

| Metric | Value |
|---|---|
| Total Games in Library | 1,064 |
| Games Successfully Analyzed | 1,047 |
| Games Skipped (Excluded) | 5 |
| Total Subgenres | 89 |
| Total Evaluation Categories | 445 |
| Average Analysis Time per Game | ~66.9 seconds |
| Total API Calls to DeepSeek | 1,787 |

445 handcrafted evaluation categories. 89 subgenres. Over a minute per game for deep analysis. The deepseek-reasoner model doesn’t rush its judgments, and honestly, I respect that. Quality takes time.
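For a sense of scale, here is a rough estimate that assumes the games were analyzed one at a time (the post doesn’t say whether requests ran in parallel). Under that assumption, the average analysis time implies roughly 19.5 hours of model time for a single full pass:

```latex
1{,}047 \times 66.9\ \text{s} \approx 70{,}044\ \text{s} = \frac{70{,}044}{3{,}600}\ \text{h} \approx 19.5\ \text{h}
```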

Distribution by Tier

Here’s how my library shakes out after the curation:

| Tier | Score Range | Games | Percentage |
|---|---|---|---|
| S | 9.5 - 10.0 | 39 | 3.7% |
| A | 9.0 - 9.49 | 132 | 12.6% |
| B | 8.0 - 8.99 | 473 | 45.2% |
| C | 7.0 - 7.99 | 215 | 20.5% |
| D | 6.0 - 6.99 | 100 | 9.6% |
| E | 5.0 - 5.99 | 42 | 4.0% |
| F | 0.0 - 4.99 | 46 | 4.4% |
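The score ranges map directly onto a lookup. Here is a minimal sketch with hypothetical names. Comparing against each tier’s lower bound, instead of the two-decimal ranges, leaves no gaps: a score like 9.495 falls between “9.0 - 9.49” and “9.5 - 10.0” in the table, and here it simply counts as an A. The second function recomputes the percentage column from the tier counts, which add up to the 1,047 analyzed games:

```python
# Lower bound of each tier, checked from best to worst (ranges from the table above).
TIER_FLOORS = [(9.5, "S"), (9.0, "A"), (8.0, "B"), (7.0, "C"), (6.0, "D"), (5.0, "E"), (0.0, "F")]


def tier_for(score: float) -> str:
    """Map a 0-10 curator score to its tier letter."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"score out of range: {score}")
    return next(tier for floor, tier in TIER_FLOORS if score >= floor)


def tier_percentages(counts: dict[str, int]) -> dict[str, float]:
    """Share of the analyzed library in each tier, rounded to one decimal place."""
    total = sum(counts.values())
    return {tier: round(count / total * 100, 1) for tier, count in counts.items()}


if __name__ == "__main__":
    counts = {"S": 39, "A": 132, "B": 473, "C": 215, "D": 100, "E": 42, "F": 46}
    print(sum(counts.values()), tier_percentages(counts))
    print(tier_for(9.49), tier_for(9.5), tier_for(4.99))
```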

The distribution tells an interesting story. Nearly half of my library (45.2%) lands in Tier B - “Very Good” territory. This makes sense: over a decade of Steam sales, I’ve accumulated games that looked promising enough to buy but never urgent enough to play. They’re not masterpieces, but they’re not garbage either. They’re the eternal backlog.

The real treasures? 39 Tier S games - the Pantheon. Less than 4% of my library achieved masterpiece status. Meanwhile, 46 games sit in Tier F, the digital equivalent of buyer’s remorse. At least now I know which ones to avoid.

Top 10 Genres by Game Count

| Rank | Genre | Games |
|---|---|---|
| 1 | Action Adventure | 53 |
| 2 | Puzzle | 44 |
| 3 | Survival Horror | 42 |
| 4 | Tactical RTS | 42 |
| 5 | Hack and Slash | 34 |
| 6 | Point and Click | 31 |
| 7 | First Person Shooter | 29 |
| 8 | Puzzle Platformer | 26 |
| 9 | Boomer Shooter | 24 |
| 10 | Open World RPG | 24 |

Apparently, my gaming taste is a chaotic blend of genres. Action Adventure and Puzzle games dominate, but Survival Horror sitting at #3 with 42 games reveals a masochistic streak I wasn’t fully aware of. The strong presence of Tactical RTS and Point and Click games is a direct consequence of growing up in the golden era of PC gaming. And yes, 24 Boomer Shooters. DOOM and its descendants have a permanent place in my heart.

Tier S Champions by Genre

Not all genres are created equal. Some genres have multiple Tier S representatives, while others have none. Here are the top genres by number of Tier S masterpieces:

| Genre | Tier S Games |
|---|---|
| CRPG | 4 |
| Puzzle Platformer | 3 |
| Visual Novel | 3 |
| Immersive Sim | 2 |
| Roguelite | 2 |
| Soulslike | 2 |
| 2D Platformer | 1 |
| Action Adventure | 1 |
| Arcade Action | 1 |
| Boomer Shooter | 1 |

CRPGs lead the pack with 4 Tier S titles. No surprises there - the genre has been experiencing a renaissance, and games like Disco Elysium, Baldur’s Gate 3, and Divinity: Original Sin 2 are simply built different. Puzzle Platformers and Visual Novels tied at 3 each, proving that you don’t need massive budgets or photorealistic graphics to achieve greatness.

Meanwhile, genres like Hidden Object (0 Tier S), Idle Clicker (0 Tier S), and Adult Visual Novel (0 Tier S - shocking, I know) remain masterpiece-free zones. At least according to my criteria.

The Graveyard Stats

| Metric | Value |
|---|---|
| Total Dead Games | 4 |
| Server Shutdowns | 3 |
| Delisted from Steam | 1 |
| Abandoned (Unplayable) | 0 |

Only 4 games in my library are now unplayable. That’s 0.4% of my collection lost to the void. A small number, but each one is a reminder that digital ownership is an illusion, and that always-online requirements are a curse upon gaming. Three of these died because their servers were shut down. They didn’t fail because they were bad games - they failed because someone decided to flip a switch.

Evaluation Categories Breakdown

Remember those 445 handcrafted evaluation categories? Here’s how they break down:

| Category Type | Count | Percentage |
|---|---|---|
| Gameplay/Mechanics | 317 | 71.2% |
| Progression/Reward | 38 | 8.5% |
| Player Experience | 36 | 8.1% |
| Design/Aesthetics | 27 | 6.1% |
| Technical/Meta | 27 | 6.1% |

The overwhelming emphasis on Gameplay/Mechanics (71.2%) is deliberate and reflects my personal philosophy: a game can have mediocre graphics and a forgettable story, but if the core gameplay loop is satisfying, it’s worth playing. Conversely, the most beautiful game in the world is worthless if playing it feels like a chore.

When I created those 445 criteria across 89 genres, I wasn’t asking “Does this game look pretty?” or “Is the soundtrack memorable?” I was asking “Is it fun to play? Does the core loop work? Does the gameplay respect my time?” That’s why Combat Flow, Map Design, Build Diversity, and similar mechanics-focused criteria dominate the evaluation system.

Challenges the Project Doesn’t Solve

Let’s be honest: no system is perfect, and this project has its limitations. There are certain challenges that, despite my best efforts, remain unsolved.

The Uncategorizable Games

Some games simply refuse to fit into any genre. They’re too weird, too experimental, or too unique to be judged by any standard criteria.

Take Bully (or Canis Canem Edit, for the Europeans). Is it an Open World Action game? Kind of. Is it a Life Sim? Sort of. Is it a Beat ’em Up? Partially. Is it a High School Simulator? Maybe? The game blends so many elements that no single genre captures its essence. DeepSeek will pick something, but whatever it picks will feel slightly wrong.

Or consider Passpartout: The Starving Artist. You play as a French artist painting your own masterpieces and trying to sell them to pretentious art connoisseurs while managing your wine and baguette addiction. Is it a Business Sim? A Creative Sandbox? An Art Simulator? A Tycoon? A comedy game about French stereotypes? Yes. All of it. None of it. Good luck finding a genre that captures that.

These games end up either in Hybrid (a catch-all category I created for exactly this problem) or Uncategorized (when even Hybrid feels like a stretch). The analysis for these games should be taken with a grain of salt. They’re not bad games; they’re just impossible to evaluate under a standardized system.

Multi-Genre Masterpieces

Related to the above, some games are genuinely excellent because they blend multiple genres. But my system evaluates them as one thing or another, never both.

A game that’s 50% Metroidvania and 50% Roguelite will be judged as either a Metroidvania (potentially missing what makes the roguelite elements great) or a Roguelite (potentially ignoring the brilliant map design). The score will be technically accurate for the chosen genre but might not reflect the full picture.

This is a fundamental limitation of any genre-based evaluation system. The only solution would be to allow multiple genre assignments with weighted criteria, but that would exponentially increase complexity. For a weekend project, I decided simplicity wins.
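For the curious, the weighted alternative I ruled out would look roughly like this: analyze the game once per assigned genre, then blend the scores by weight. This is a hypothetical sketch, not something the curator does. The genre split, weights, and scores are invented for illustration. The blending itself is trivial. The cost sits upstream, because every extra genre means another full set of criteria and another analysis pass per game:

```python
def blended_score(genre_scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-genre scores (0-10). Weights need not sum to 1."""
    if set(genre_scores) != set(weights):
        raise ValueError("every genre needs both a score and a weight")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(genre_scores[genre] * weights[genre] for genre in genre_scores) / total_weight


if __name__ == "__main__":
    # Hypothetical 50/50 Metroidvania-Roguelite hybrid.
    scores = {"Metroidvania": 9.2, "Roguelite": 8.6}
    weights = {"Metroidvania": 0.5, "Roguelite": 0.5}
    print(round(blended_score(scores, weights), 2))
```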

The Project’s True Purpose

I want to be very clear about something: the goal of this project is NOT to be a definitive game analyzer.

This is not a replacement for reading reviews, watching gameplay videos, or forming your own opinions. The 2miu Curator is not trying to tell you what’s objectively good or bad.

The real purpose is much simpler: to help me find games worth trying.

With over 1000 games in my library, I will never have time to play them all. I need a filter. A way to say, “Hey, among all these games you bought on sale and forgot about, these ones might actually be worth your time.”

And you know what? It works.

Let me give you two examples.

Command & Conquer™ 3: Kane’s Wrath received a Tier S rating. This is a game I bought in some bundle years ago and never even installed. Real-Time Strategy isn’t my primary genre. I would have never played this game organically. But now? Now I’m curious. A Tier S in my own curation system? Maybe I should give it a shot.

RollerCoaster Tycoon 2 is another Tier S surprise. I have vague childhood memories of the original, but I never committed to playing the sequel. It’s been sitting in my library for years, untouched. The curator says it’s exceptional in the Tycoon category. Time to build some roller coasters, I guess.

These are the discoveries that make the project worthwhile. Not validating games I already knew were great, but surfacing hidden gems I would have otherwise ignored.

Validation: The Masterpieces

Of course, the system would be worthless if it failed to recognize obvious quality. So I was relieved (and honestly a bit proud) when the analysis confirmed what any gamer already knows.

Celeste? Tier S.

Hollow Knight? Tier S.

Elden Ring? Tier S.

Hades? Tier S.

Disco Elysium? Tier S.

These games aren’t surprises. They’re validation. When your AI-powered curation system correctly identifies universally acclaimed masterpieces as masterpieces, you know the criteria are working. The system isn’t broken. It’s not randomly assigning scores. There’s actual logic behind the evaluations.

And that gives me confidence in the surprises. If the system is right about Hollow Knight, maybe it’s also right about that obscure game I’ve never heard of that somehow landed in Tier S.

Conclusion: The Editor-in-Chief of My Own Magazine

Let me take you back to where we started: a kid reading Hobby Consolas and Playmania, fascinated by game analysis and review scores.

I never became a game journalist. I never worked for a gaming magazine. But with this project, I kind of became something similar: the Editor-in-Chief of my own personal gaming publication.

Think about it. In traditional game journalism, the editor-in-chief defines the editorial line. They establish the criteria, the standards, the philosophy of how games should be evaluated. Then they hire journalists to write the actual reviews following those guidelines.

That’s exactly what I did here.

DeepSeek writes the text. It generates the scores. It produces the justifications and summaries. But the soul of every analysis is mine. Every criterion was handcrafted by me. Every evaluation parameter reflects my personal gaming philosophy. What I value in a Metroidvania. What I expect from a Looter ARPG. What makes a Soulslike great.
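In code, that division of labor can be as simple as putting the handcrafted criteria into the prompt and leaving only the writing to the model. This is a hypothetical sketch: the post doesn’t show its prompts, so the wording, the function name, and the message layout are assumptions. DeepSeek’s API accepts OpenAI-style chat messages, which is why the output is a list of role/content dictionaries. The actual API call is left out:

```python
def build_review_messages(game: str, genre: str, criteria: list[str]) -> list[dict[str, str]]:
    """Build an OpenAI-style chat payload in which the criteria, not the model, set the rules."""
    checklist = "\n".join(f"- {name}: score 0-10 and justify it in one paragraph" for name in criteria)
    system = (
        "You are a staff reviewer for a personal game curation project.\n"
        f"Evaluate games in the {genre} genre strictly against these criteria, "
        "ignoring popularity and existing review scores:\n"
        f"{checklist}\n"
        "Finish with an overall score from 0 to 10 and a short summary."
    )
    user = f"Review this game: {game}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


if __name__ == "__main__":
    for message in build_review_messages("Hollow Knight", "Metroidvania", ["Map Design", "Combat Flow"]):
        print(f"[{message['role']}]\n{message['content']}\n")
```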

The AI is my journalist. I am the editorial line.

In the end, those 445 manually created evaluation categories aren’t just data points. They’re a manifesto of my gaming taste, codified into a system that can analyze over 1000 games in ways I never could manually.

It’s 2026, and I finally have my own Hobby Consolas. It’s digital, it’s AI-powered, and it only reviews the games I own. But that kid who used to stop by the newsstand every month would be pretty impressed.

The .2miu Curator is a personal project and is not affiliated with Steam, Valve, DeepSeek, or any of the games mentioned. All opinions expressed are my own, filtered through an AI that has no choice but to agree with me. That’s the beauty of being the Editor-in-Chief.

Post-Credits Scene: The Command Line Arsenal

For the nerds who stayed until the end.

You didn’t think I’d let you leave without showing off the CLI, did you? Here’s every command the .2miu Curator supports. Feel free to imagine the satisfying terminal output.

Core Commands

```shell
./curator sync                            # Sync Steam library
./curator analyze                         # Analyze pending games
./curator status                          # Show processing progress
./curator genres                          # List available genres
./curator check-excluded                  # Check excluded games
./curator check-game --id=<id>            # Check specific game
./curator audit --f <file>                # Process audit file
./curator deduplicate-analyses            # Remove duplicate analyses
./curator move-to-graveyard --f <file>    # Move games to graveyard
./curator analyze-graveyard               # Analyze graveyard games
```

Analyze Flags

For when you need surgical precision in your curation:

```shell
./curator analyze --id=220               # Analyze by App ID
./curator analyze --id=220,400,570       # Analyze multiple games
./curator analyze --genre="Soulslike"    # Re-analyze entire genre
./curator analyze --all                  # Re-analyze everything (grab a coffee)
./curator analyze --games 10             # Limit to N games
./curator analyze --zero-reviews         # Re-analyze games with 0% reviews
```
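The curator’s source isn’t shown, so its implementation language and internals are unknown. Here is a hypothetical Python sketch, using the standard `argparse` module, of how the analyze flags above could be parsed. It covers only the flags listed, and it splits the comma-separated `--id` value into integer App IDs:

```python
import argparse


def parse_ids(raw: str) -> list[int]:
    """Turn '220,400,570' into [220, 400, 570]; reject anything that isn't an integer."""
    try:
        ids = [int(part) for part in raw.split(",") if part.strip()]
    except ValueError:
        raise argparse.ArgumentTypeError(f"invalid App ID list: {raw!r}") from None
    if not ids:
        raise argparse.ArgumentTypeError("expected at least one App ID")
    return ids


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="curator")
    commands = parser.add_subparsers(dest="command", required=True)

    analyze = commands.add_parser("analyze", help="Analyze pending games")
    analyze.add_argument("--id", type=parse_ids, help="App ID or comma-separated App IDs")
    analyze.add_argument("--genre", help="Re-analyze every game in this genre")
    analyze.add_argument("--all", action="store_true", help="Re-analyze everything")
    analyze.add_argument("--games", type=int, help="Limit to N games")
    # argparse %-formats help strings, so a literal percent sign is written as %%.
    analyze.add_argument("--zero-reviews", action="store_true",
                         help="Re-analyze games with 0%% reviews")
    return parser


if __name__ == "__main__":
    print(build_parser().parse_args(["analyze", "--id=220,400,570"]))
```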

Sync Flags

```shell
./curator sync --games 100          # Limit sync to N games
./curator sync --force-recreate     # WARNING: Deletes ALL data
```

That --force-recreate flag? I’ve used it exactly once. By accident. At 2 AM. After 800 games had already been analyzed.

Learn from my mistakes.
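If you build something similar, one cheap safeguard is to make the destructive path demand a typed confirmation rather than trusting a flag alone. This is a hypothetical Python sketch: the function names and the confirmation phrase are invented, and the reader function can be swapped out so the guard can be tested without a terminal:

```python
from typing import Callable


def confirm_destructive(action: str, phrase: str, read: Callable[[str], str] = input) -> bool:
    """Require the user to type an exact phrase before a destructive action runs."""
    answer = read(f"{action} will delete ALL data. Type '{phrase}' to continue: ")
    return answer.strip() == phrase


def sync_force_recreate(read: Callable[[str], str] = input) -> str:
    """Hypothetical wrapper around the destructive sync path."""
    if not confirm_destructive("sync --force-recreate", "recreate", read):
        return "aborted"
    # ... wipe and rebuild the local data here (omitted in this sketch) ...
    return "recreated"
```

A stray "y" typed at 2 AM aborts. Only the full phrase gets through.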